DeepSeek has unveiled its first-generation reasoning models, DeepSeek-R1 and DeepSeek-R1-Zero, which are designed to tackle complex reasoning tasks.

DeepSeek-R1-Zero is trained solely through large-scale reinforcement learning (RL) without relying on supervised fine-tuning (SFT) as a preliminary step. According to DeepSeek, this approach has led to the natural emergence of “numerous powerful and interesting reasoning behaviours,” including self-verification, reflection, and the generation of extensive chains of thought (CoT).

“Notably, [DeepSeek-R1-Zero] is the first open research to validate that reasoning capabilities of LLMs can be incentivised purely through RL, without the need for SFT,” DeepSeek researchers explained. This milestone not only underscores the model’s innovative foundations but also paves the way for RL-focused advancements in reasoning AI.

However, DeepSeek-R1-Zero’s capabilities come with certain limitations. Key challenges include “endless repetition, poor readability, and language mixing,” which could pose significant hurdles in real-world applications. To address these shortcomings, DeepSeek developed its flagship model: DeepSeek-R1.

Introducing DeepSeek-R1

DeepSeek-R1 builds upon its predecessor by incorporating cold-start data prior to RL training. This additional fine-tuning step improves the model’s reasoning capabilities and resolves many of the limitations noted in DeepSeek-R1-Zero.

Notably, DeepSeek-R1 achieves performance comparable to OpenAI’s much-lauded o1 system across mathematics, coding, and general reasoning tasks, cementing its place as a leading competitor.

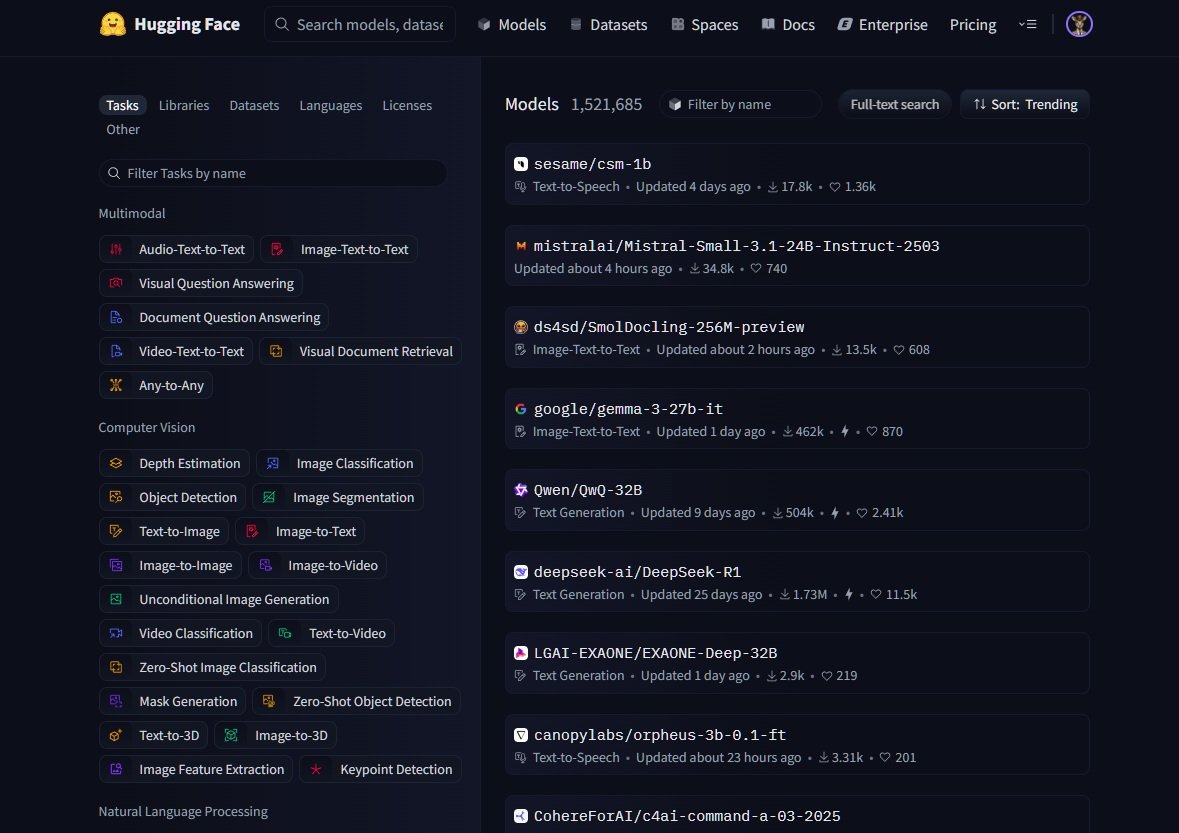

DeepSeek has chosen to open-source both DeepSeek-R1-Zero and DeepSeek-R1 along with six smaller distilled models. Among these, DeepSeek-R1-Distill-Qwen-32B has demonstrated exceptional results—even outperforming OpenAI’s o1-mini across multiple benchmarks.

MATH-500 (Pass@1): DeepSeek-R1 achieved 97.3%, edging past OpenAI’s o1 (96.4%) and other key competitors.

LiveCodeBench (Pass@1-COT): The distilled version DeepSeek-R1-Distill-Qwen-32B scored 57.2%, a standout performance among smaller models.

AIME 2024 (Pass@1): DeepSeek-R1 achieved 79.8%, setting an impressive standard in mathematical problem-solving.
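Pass@1 figures like those above are commonly estimated by sampling several answers per problem and averaging how often they are correct. The following is a minimal sketch of that calculation; the function names and the toy data are illustrative assumptions, not DeepSeek's evaluation code.

```python
from statistics import mean


def pass_at_1(samples_correct: list[bool]) -> float:
    """Estimate pass@1 for one problem as the fraction of sampled answers that are correct."""
    if not samples_correct:
        raise ValueError("need at least one sampled answer")
    return sum(samples_correct) / len(samples_correct)


def benchmark_pass_at_1(results: dict[str, list[bool]]) -> float:
    """Average the per-problem pass@1 estimates over the whole benchmark."""
    return mean(pass_at_1(outcomes) for outcomes in results.values())


# Toy data (made up for illustration): 3 problems, 4 sampled answers each.
toy_results = {
    "problem_1": [True, True, True, True],
    "problem_2": [True, False, True, False],
    "problem_3": [False, False, False, False],
}
score = benchmark_pass_at_1(toy_results)
print(f"pass@1 = {score:.1%}")
```

Averaging over several samples per problem gives a steadier estimate than scoring a single generation, which matters on small benchmarks where one problem shifts the score noticeably.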

A pipeline to benefit the wider industry

DeepSeek has shared insights into its rigorous pipeline for reasoning model development, which integrates a combination of supervised fine-tuning and reinforcement learning.

According to the company, the process involves two SFT stages to establish the foundational reasoning and non-reasoning abilities, as well as two RL stages tailored for discovering advanced reasoning patterns and aligning these capabilities with human preferences.
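The four stages can be pictured as an ordered list. The sketch below is only a schematic: the article states that there are two SFT and two RL stages, while the interleaved ordering, the `Stage` type, and the stage descriptions are assumptions made for illustration rather than DeepSeek's training code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    kind: str     # "SFT" or "RL"
    purpose: str  # what the stage is meant to contribute


# Hypothetical stage list; the ordering and wording are assumptions.
PIPELINE = [
    Stage("SFT", "seed foundational reasoning ability from cold-start data"),
    Stage("RL", "discover improved reasoning patterns"),
    Stage("SFT", "add non-reasoning abilities alongside reasoning"),
    Stage("RL", "align the model with human preferences"),
]


def count_stages(pipeline: list[Stage], kind: str) -> int:
    """Count how many stages of a given kind the pipeline contains."""
    return sum(1 for stage in pipeline if stage.kind == kind)


for step, stage in enumerate(PIPELINE, start=1):
    print(f"{step}. {stage.kind}: {stage.purpose}")
```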

“We believe the pipeline will benefit the industry by creating better models,” DeepSeek remarked, alluding to the potential of their methodology to inspire future advancements across the AI sector.

One standout achievement of their RL-focused approach is the ability of DeepSeek-R1-Zero to execute intricate reasoning patterns without prior human instruction—a first for the open-source AI research community.

Importance of distillation

DeepSeek researchers also highlighted the importance of distillation: transferring reasoning abilities from larger models to smaller, more efficient ones. The strategy has unlocked performance gains even for smaller configurations.

Smaller distilled iterations of DeepSeek-R1, such as the 1.5B, 7B, and 14B versions, were able to hold their own in niche applications. According to DeepSeek, the distilled models can outperform models of comparable size that were trained directly with RL.
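One common way to distil reasoning behaviour is to have the larger model generate worked answers and then fine-tune the smaller model on them. The sketch below covers only the data-collection half of that idea. `teacher_generate` is a hypothetical stand-in for a call to the large model, and the record format and `keep` filter are assumptions, not DeepSeek's pipeline.

```python
from typing import Callable


def build_distillation_set(
    prompts: list[str],
    teacher_generate: Callable[[str], str],
    keep: Callable[[str, str], bool] = lambda prompt, answer: bool(answer.strip()),
) -> list[dict[str, str]]:
    """Collect (prompt, teacher answer) pairs to use as SFT data for a student model."""
    dataset = []
    for prompt in prompts:
        answer = teacher_generate(prompt)
        if keep(prompt, answer):  # drop empty or otherwise rejected generations
            dataset.append({"prompt": prompt, "completion": answer})
    return dataset


# Stand-in teacher for demonstration only; a real one would query the large model.
def fake_teacher(prompt: str) -> str:
    return "" if "skip" in prompt else f"Reasoning about: {prompt}"


examples = build_distillation_set(["2 + 2 = ?", "skip me"], fake_teacher)
print(examples)
```

The resulting list could then be passed to any supervised fine-tuning routine for the smaller model; the filter is where a correctness or quality check would go.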

🔥 Bonus: Open-Source Distilled Models!

🔬 Distilled from DeepSeek-R1, 6 small models fully open-sourced

📏 32B & 70B models on par with OpenAI-o1-mini

🤝 Empowering the open-source community

🌍 Pushing the boundaries of **open AI**!

— DeepSeek (@deepseek_ai) January 20, 2025

For researchers, these distilled models are available in configurations spanning from 1.5 billion to 70 billion parameters, built on Qwen2.5 and Llama3 base models. This range lets users pick a model to suit tasks from coding to natural language understanding.
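For readers who want to try a checkpoint, the sketch below maps a base family and size to a Hugging Face repository name. The naming pattern, the per-family size list (including an 8B Llama variant not mentioned in this article), and the `load` helper are assumptions; check the official model cards before relying on them.

```python
# Sizes per base family; assumed, see the note above.
DISTILLED_SIZES = {
    "Qwen": ["1.5B", "7B", "14B", "32B"],
    "Llama": ["8B", "70B"],
}


def repo_id(family: str, size: str) -> str:
    """Build the assumed Hugging Face repo id for a distilled checkpoint."""
    if size not in DISTILLED_SIZES.get(family, []):
        raise ValueError(f"no distilled {family} model of size {size}")
    return f"deepseek-ai/DeepSeek-R1-Distill-{family}-{size}"


def load(family: str, size: str):
    """Load tokenizer and model; requires `transformers` and a large download."""
    from transformers import AutoModelForCausalLM, AutoTokenizer

    name = repo_id(family, size)
    return AutoTokenizer.from_pretrained(name), AutoModelForCausalLM.from_pretrained(name)


print(repo_id("Qwen", "1.5B"))
```

The `transformers` import sits inside `load` so that the name lookup works without the library installed; only the actual download needs it.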

DeepSeek has adopted the MIT License for its repository and weights, extending permissions for commercial use and downstream modifications. Derivative works, such as using DeepSeek-R1 to train other large language models (LLMs), are permitted. However, users of specific distilled models should ensure compliance with the licences of the original base models, such as the Apache 2.0 and Llama3 licences.

(Photo by Prateek Katyal)
